Hadoop vs Snowflake

July 21, 2023 | Author: Michael Stromann

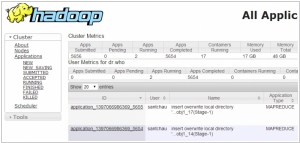

The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Rather than relying on hardware to deliver high availability, the library itself is designed to detect and handle failures at the application layer, thereby delivering a highly available service on top of a cluster of computers, each of which may be prone to failure.

See also:

Top 10 Big Data platforms

Hadoop and Snowflake are both prominent data processing and storage solutions, but they differ in ways that suit different user needs. Hadoop is an open-source framework for the distributed processing of large datasets across clusters of commodity hardware. Its core includes the Hadoop Distributed File System (HDFS) for data storage and MapReduce for processing data in parallel. Hadoop requires users to set up and manage clusters themselves, which makes it more suitable for organizations with specific infrastructure requirements and in-house technical expertise. Snowflake, on the other hand, is a cloud-based data warehousing platform that specializes in data storage, processing, and querying. Its architecture handles large-scale data storage and retrieval efficiently, making it a strong choice for businesses with heavy data warehousing requirements. Because Snowflake separates compute from storage, organizations can scale processing capacity independently of the amount of data stored, and its fully managed service eliminates the need for manual cluster management.
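
To make the MapReduce model mentioned above more concrete, here is a minimal single-process sketch of a word count in plain Python. It does not use Hadoop or any Hadoop API; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) and the sample input are assumptions chosen purely for illustration. On a real cluster, the framework would run the map and reduce steps in parallel across machines and read the input from HDFS.

```python
from collections import defaultdict


def map_phase(line):
    """Emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group values by key, mimicking the step between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Combine all counts for one word into a single total."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce sequentially in one process."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


if __name__ == "__main__":
    # Hypothetical sample input; a real job would read files from HDFS.
    sample = ["Hadoop stores data in HDFS", "Snowflake stores data in the cloud"]
    print(word_count(sample))
```

The three functions mirror the phases a MapReduce job goes through. Each map call depends only on its own input line, and each reduce call depends only on one key, which is what lets a cluster spread the work across many machines.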

Hadoop vs Snowflake in our news:

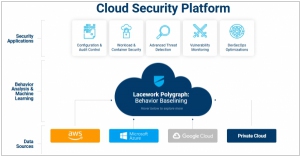

2021. Cloud security startup Lacework has raised $525M

Lacework, a cloud security startup that offers a solution built on the Snowflake platform, has raised $525 million in its Series D funding round, bringing its total funding to over $1 billion. The company reported a remarkable 300% year-over-year revenue growth for the second consecutive year. Investors and customers have shown significant interest due to the comprehensive nature of Lacework's security solution. It enables companies to securely build in the cloud and covers multiple market categories to meet diverse customer needs. This includes services such as configuration and compliance, infrastructure-as-code security, vulnerability scanning during build time and runtime, as well as runtime security for cloud-native environments like Kubernetes and containers.

2014. MapR partners with Teradata to reach enterprise customers

The last remaining independent Hadoop provider, MapR, and the prominent big data analytics provider, Teradata, have agreed to integrate their respective products and develop a unified go-to-market strategy. As part of this partnership, Teradata can resell MapR software and professional services and provide customer support. In effect, Teradata will represent MapR and act as the primary point of contact for enterprises that use, or want to use, both technologies. Teradata had previously established a close partnership with Hortonworks, and it now extends its collaboration to all three major Hadoop providers. Similarly, earlier this week, HP unveiled Vertica for SQL on Hadoop, which lets users access and analyze data stored in any of the three primary Hadoop distributions: Hortonworks, MapR, and Cloudera.

2014. HP plugs the Vertica analytics platform into Hadoop

HP has introduced Vertica for SQL on Hadoop. With it, customers can access and analyze data stored in any of the three primary Hadoop distributions (Hortonworks, MapR, and Cloudera) or in any combination of them. Because it is unclear which Hadoop flavor will come to dominate, many large companies use all three. HP is one of the first vendors to assert that "any flavor of Hadoop will do." HP has also invested $50 million in Hortonworks, which is currently the favored Hadoop flavor within HAVEn, HP's analytics stack. The announcement emphasizes the platform's interoperability and its ability to work with data stored in diverse environments such as data lakes or enterprise data hubs. With HP Vertica, organizations can explore and analyze data stored in the Hadoop Distributed File System (HDFS). The combination of Vertica's speed and scalability with Hadoop's ability to handle very large data sets may persuade hesitant managers to commit to big data initiatives.

2014. Cloudera helps to manage Hadoop on Amazon cloud

Hadoop vendor Cloudera has unveiled a new offering named Director, aimed at simplifying the management of Hadoop clusters on the Amazon Web Services (AWS) cloud. Clarke Patterson, Senior Director of Product Marketing, acknowledged the challenges faced by customers in managing Hadoop clusters while maintaining extensive capabilities. He emphasized that there is no difference between the cloud version and the on-premises version of the software. However, the Director interface has been specifically designed to be self-service, incorporating cloud-specific features like instance-tracking. This enables administrators to monitor the cost associated with each cloud instance, ensuring better cost management.